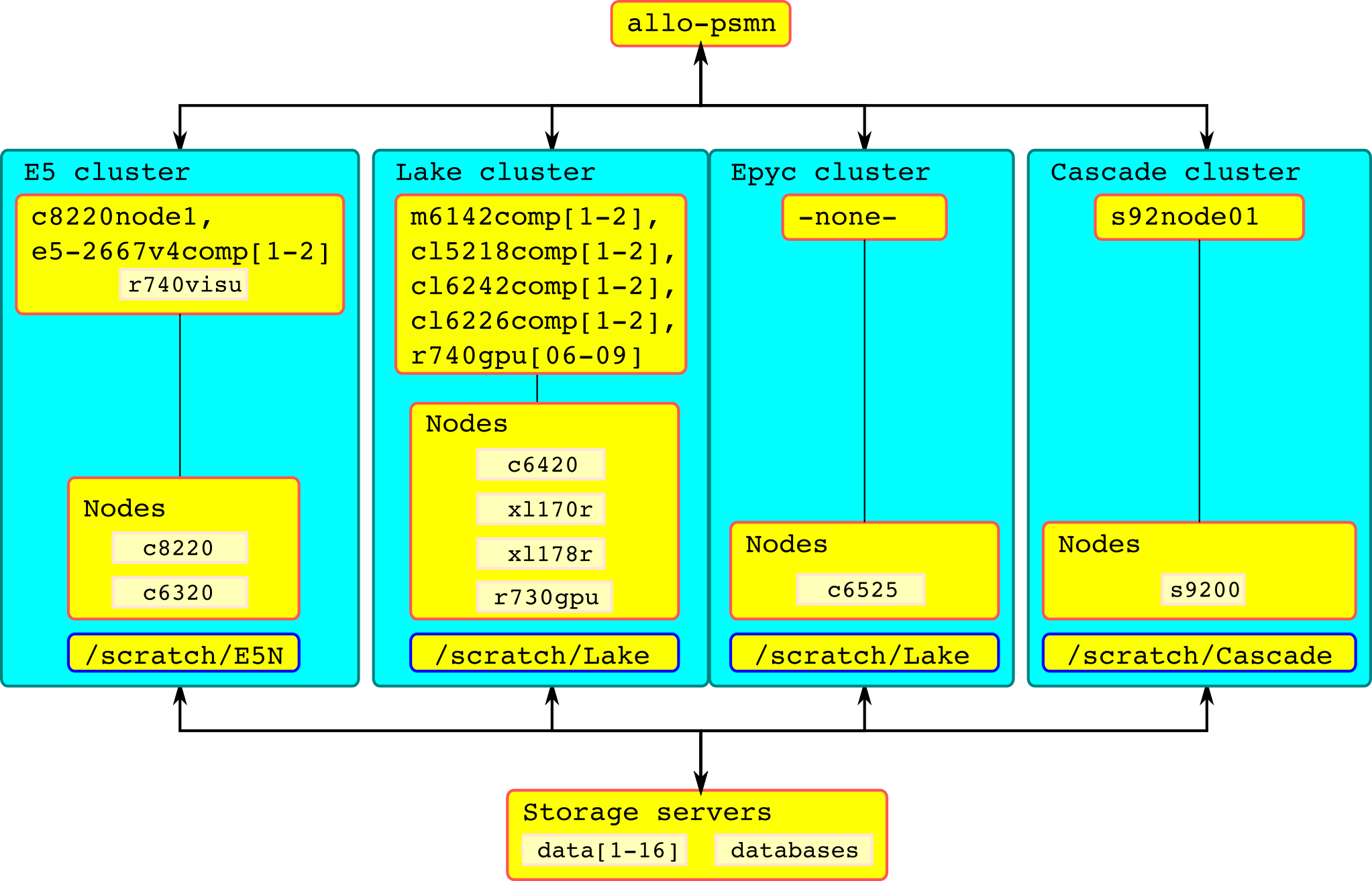

Clusters/Partitions overview

Fig. 35 A view of PSMN clusters

Note

Clusters are hardware assemblies (including their dedicated network, etc.). Partitions are logical divisions within clusters.

Cluster/Partition E5

This partition is open to everyone.

| login nodes | CPU Model | cores | RAM | ratio | InfiniBand | GPU | local scratch |
|---|---|---|---|---|---|---|---|
| c8220node1 | E5-2670 0 @ 2.6GHz | 16 | 64 GiB | 4 GiB/core | 56 Gb/s | | |
| | E5-2670 0 @ 2.6GHz | 16 | 256 GiB | 16 GiB/core | 56 Gb/s | | /scratch/ssd |
| | E5-2650v2 @ 2.6GHz | 16 | 256 GiB | 16 GiB/core | 56 Gb/s | | /scratch/ssd |
| | E5-2667v2 @ 3.3GHz | 16 | 128 GiB | 8 GiB/core | 56 Gb/s | | |
| | E5-2667v2 @ 3.3GHz | 16 | 256 GiB | 16 GiB/core | 56 Gb/s | | |
| e5-2667v4comp[1-2] | E5-2667v4 @ 3.2GHz | 16 | 128 GiB | 8 GiB/core | 56 Gb/s | | |
| | E5-2667v4 @ 3.2GHz | 16 | 256 GiB | 16 GiB/core | 56 Gb/s | | |
| | E5-2697Av4 @ 2.6GHz | 32 | 256 GiB | 8 GiB/core | 56 Gb/s | | |
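The ratio column is the node's RAM divided by its core count. The Python sketch below recomputes it for the E5 node types, with values transcribed from the table; `NodeType` and `E5_NODES` are hypothetical names used only for illustration, not part of any PSMN tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeType:
    """One row of the hardware table (hypothetical helper, not a PSMN tool)."""
    cpu: str
    cores: int
    ram_gib: int

    def gib_per_core(self) -> float:
        # Memory ratio = total RAM of the node / number of cores of the node
        return self.ram_gib / self.cores


# Node types of partition E5, transcribed from the table above
E5_NODES = [
    NodeType("E5-2670 0 @ 2.6GHz", 16, 64),
    NodeType("E5-2670 0 @ 2.6GHz", 16, 256),
    NodeType("E5-2650v2 @ 2.6GHz", 16, 256),
    NodeType("E5-2667v2 @ 3.3GHz", 16, 128),
    NodeType("E5-2667v2 @ 3.3GHz", 16, 256),
    NodeType("E5-2667v4 @ 3.2GHz", 16, 128),
    NodeType("E5-2667v4 @ 3.2GHz", 16, 256),
    NodeType("E5-2697Av4 @ 2.6GHz", 32, 256),
]

if __name__ == "__main__":
    for node in E5_NODES:
        print(f"{node.cpu:22} {node.cores:3}c {node.ram_gib:4} GiB -> "
              f"{node.gib_per_core():g} GiB/core")
```

For example, the E5-2697Av4 nodes give 256 GiB / 32 cores = 8 GiB/core.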

Hint

Best use case: training, sequential jobs, small parallel jobs (<32c)

E5 scratch (general-purpose scratch) is available from all nodes of the partition, at the following path:

/scratch/E5N

Nodes c8220node[41-56,169-176] have a local scratch on /scratch/ssd, reserved for the photochimie group (120-day lifetime residency, --constraint=local_scratch). Choose ‘E5’ environment modules (see Modular Environment). See Using X2Go for data visualization for information on visualization servers.
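Node lists such as c8220node[41-56,169-176] use Slurm's compact hostlist notation; on the cluster, `scontrol show hostnames 'c8220node[41-56,169-176]'` expands them. The Python sketch below performs the same expansion, assuming only the simple forms used on this page (one bracket group per name, comma-separated numbers or ranges, zero-padding taken from the range start); `expand_hostlist` is a hypothetical helper, not a Slurm API.

```python
import re
from typing import List


def split_top_level(expr: str) -> List[str]:
    """Split on commas that are outside square brackets."""
    parts, current, depth = [], [], 0
    for ch in expr:
        if ch == "[":
            depth += 1
        elif ch == "]":
            depth -= 1
        if ch == "," and depth == 0:
            parts.append("".join(current))
            current = []
        else:
            current.append(ch)
    parts.append("".join(current))
    return [p for p in parts if p]


def expand_hostlist(expr: str) -> List[str]:
    """Expand a simple hostlist like 'c6420node[049-060],r740bigmem201'."""
    hosts = []
    for item in split_top_level(expr):
        match = re.fullmatch(r"([^\[\]]*)\[([^\]]+)\](.*)", item)
        if match is None:
            hosts.append(item)  # plain host name, no bracket range
            continue
        prefix, ranges, suffix = match.groups()
        for rng in ranges.split(","):
            start, _, end = rng.partition("-")
            end = end or start          # single number, e.g. '[5]'
            width = len(start)          # keeps zero-padding: 049 -> 049, 050...
            for n in range(int(start), int(end) + 1):
                hosts.append(f"{prefix}{n:0{width}d}{suffix}")
    return hosts


if __name__ == "__main__":
    print(expand_hostlist("c8220node[41-56,169-176]"))
```

With the node list above, this yields 24 names, from c8220node41 to c8220node176.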

Cluster Lake

Partition E5-GPU

This partition is open to everyone.

Hint

Best use case: GPU jobs, training, sequential jobs, small parallel jobs (<32c)

| login nodes | CPU Model | cores | RAM | ratio | InfiniBand | GPU | local scratch |
|---|---|---|---|---|---|---|---|
| r730gpu01 | E5-2637v3 @ 3.5GHz | 8 | 128 GiB | 16 GiB/core | 56 Gb/s | RTX2080Ti | /scratch/Lake |

Choose ‘E5’ environment modules (see Modular Environment).

Partition E5-GPU has access to the Lake scratches, but has no access to /scratch/E5N (see Partition Lake below).
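A GPU job requests both the partition and the GPU in its batch script. The Python sketch below only writes the #SBATCH preamble; it assumes the Slurm partition is named E5-GPU like this section and that the GPUs are exposed as the generic resource `gpu` (both to be checked with `sinfo` or the site documentation). `sbatch_header` is a hypothetical helper; the options it emits (--partition, --ntasks, --cpus-per-task, --time, --gres, --constraint) are standard sbatch options.

```python
from typing import Optional


def sbatch_header(job_name: str, partition: str, ntasks: int = 1,
                  cpus_per_task: int = 1, time: str = "01:00:00",
                  gpus: int = 0, constraint: Optional[str] = None) -> str:
    """Return the '#SBATCH' preamble of a batch script (illustrative only)."""
    lines = [
        "#!/bin/bash",
        f"#SBATCH --job-name={job_name}",
        f"#SBATCH --partition={partition}",
        f"#SBATCH --ntasks={ntasks}",
        f"#SBATCH --cpus-per-task={cpus_per_task}",
        f"#SBATCH --time={time}",
    ]
    if gpus:
        # Generic resource request; the GRES name 'gpu' is an assumption
        lines.append(f"#SBATCH --gres=gpu:{gpus}")
    if constraint:
        # e.g. 'local_scratch' to land on nodes with a node-local scratch
        lines.append(f"#SBATCH --constraint={constraint}")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # One GPU and 4 of the 8 cores of an E5-GPU node (hypothetical sizing)
    print(sbatch_header("train", "E5-GPU", cpus_per_task=4, gpus=1))
```

The same helper covers the local-scratch case by passing constraint="local_scratch".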

Partition Lake

This partition is open to everyone.

Hint

Best use case: medium parallel jobs (<384c)

| login nodes | CPU Model | cores | RAM | ratio | InfiniBand | GPU | local scratch |
|---|---|---|---|---|---|---|---|
| m6142comp[1-2] | Gold 6142 @ 2.6GHz | 32 | 384 GiB | 12 GiB/core | 56 Gb/s | | |
| | Gold 6142 @ 2.6GHz | 32 | 384 GiB | 12 GiB/core | 56 Gb/s | | /scratch/disk/ |
| | Gold 5118 @ 2.3GHz | 24 | 96 GiB | 4 GiB/core | 56 Gb/s | | |
| cl6242comp[1-2] | Gold 6242 @ 2.8GHz | 32 | 384 GiB | 12 GiB/core | 56 Gb/s | | |
| cl5218comp[1-2] | Gold 5218 @ 2.3GHz | 32 | 192 GiB | 6 GiB/core | 56 Gb/s | | |
| cl6226comp[1-2] | Gold 6226R @ 2.9GHz | 32 | 192 GiB | 6 GiB/core | 56 Gb/s | | |

Lake scratches are available from all nodes of the partition, at the following paths:

/scratch/
├── Bio
├── Chimie
├── Lake (general purpose scratch)
└── Themiss

‘Bio’ and ‘Chimie’ scratches are meant for users of the respective labs and teams.

‘Themiss’ scratch is reserved for Themiss ERC users.

‘Lake’ is for all users (general-purpose scratch).
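Which scratch tree is reachable depends on the partition. The Python sketch below transcribes the paths given on this page into a lookup table; it assumes Lake-bigmem, Lake-flix and Cascade-flix see the same scratches as their parent partitions (stated for the first two, implied for Cascade-flix), and it ignores node-local scratches and the group restrictions on Bio, Chimie, Themiss and Cral. The names `can_access` and `general_purpose_scratch` are hypothetical.

```python
from typing import Dict, List

# Scratch roots reachable from each partition, as listed on this page
LAKE = ["/scratch/Bio", "/scratch/Chimie", "/scratch/Lake", "/scratch/Themiss"]
CASCADE = ["/scratch/Cascade", "/scratch/Cral"]

SCRATCH_BY_PARTITION: Dict[str, List[str]] = {
    "E5": ["/scratch/E5N"],
    "E5-GPU": LAKE,
    "Lake": LAKE,
    "Lake-bigmem": LAKE,
    "Lake-flix": LAKE,
    "Epyc": LAKE,
    "Cascade": CASCADE,
    "Cascade-flix": CASCADE,
}

# General-purpose scratch of each family (open to all users)
GENERAL_PURPOSE = {"/scratch/E5N", "/scratch/Lake", "/scratch/Cascade"}


def can_access(partition: str, path: str) -> bool:
    """True if `path` is one of the partition's scratch roots or lies below one."""
    roots = SCRATCH_BY_PARTITION.get(partition, [])
    return any(path == root or path.startswith(root + "/") for root in roots)


def general_purpose_scratch(partition: str) -> str:
    """Return the general-purpose scratch root visible from `partition`."""
    for root in SCRATCH_BY_PARTITION[partition]:
        if root in GENERAL_PURPOSE:
            return root
    raise KeyError(partition)
```

For instance, can_access("E5-GPU", "/scratch/E5N/data") is False, matching the note in Partition E5-GPU, while general_purpose_scratch("Epyc") returns "/scratch/Lake".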

Nodes c6420node[049-060] and r740bigmem201 have a local scratch on /scratch/disk/ (120-day lifetime residency, --constraint=local_scratch). Choose ‘Lake’ environment modules (see Modular Environment). See Using X2Go for data visualization for information on visualization servers.
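Local scratches have a 120-day lifetime residency, so older data may be removed. The Python sketch below lists files older than that under a directory, assuming age is measured from the last modification time (this page does not say which timestamp the cleanup uses); `files_past_residency` and the directory argument are hypothetical.

```python
import os
import time
from pathlib import Path
from typing import List, Optional

RESIDENCY_DAYS = 120  # lifetime residency stated for the local scratches


def files_past_residency(root: str, days: int = RESIDENCY_DAYS,
                         now: Optional[float] = None) -> List[Path]:
    """List regular files under `root` last modified more than `days` ago.

    Assumes age is measured from st_mtime; the actual purge policy may differ.
    """
    now = time.time() if now is None else now
    cutoff = now - days * 86400
    old = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = Path(dirpath) / name
            try:
                if path.stat().st_mtime < cutoff:
                    old.append(path)
            except FileNotFoundError:
                continue  # removed while walking, or a dangling symlink
    return sorted(old)


if __name__ == "__main__":
    import sys
    # Usage: python old_files.py /scratch/disk/<your directory>
    for root in sys.argv[1:]:
        for path in files_past_residency(root):
            print(path)
```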

Partition Lake-bigmem

This partition is NOT open to everyone: open a ticket to request access (granted via a QoS).

Hint

Best use case: large memory jobs, sequential jobs

| login nodes | CPU Model | cores | RAM | ratio | InfiniBand | GPU | local scratch |
|---|---|---|---|---|---|---|---|
| none | Gold 6226R @ 2.9GHz | 32 | 1.5 TiB | 46 GiB/core | 56 Gb/s | | /scratch/disk/ |

Lake scratches are available (see Partition Lake above), as well as a local scratch on /scratch/disk/ (120-day lifetime residency, --constraint=local_scratch).
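Large-memory jobs are where the memory ratio matters most. Assuming memory is allotted in proportion to the cores requested (an assumption: the site's Slurm memory policy is not described on this page, and sbatch's --mem option requests memory directly), the number of cores to reserve is the memory need divided by the ratio, rounded up. The Python sketch below uses the ratios from the Lake and Lake-bigmem tables; `cores_for_memory` is hypothetical.

```python
import math


def cores_for_memory(mem_gib: float, gib_per_core: float, cores_per_node: int) -> int:
    """Cores whose combined memory share covers mem_gib on a single node.

    Assumes memory is granted per core at the node's ratio. Raises ValueError
    when even the whole node is too small.
    """
    cores = math.ceil(mem_gib / gib_per_core)
    if cores > cores_per_node:
        raise ValueError(
            f"{mem_gib} GiB needs {cores} cores at {gib_per_core} GiB/core, "
            f"but a node only has {cores_per_node}"
        )
    return cores


if __name__ == "__main__":
    # 500 GiB does not fit a 384 GiB Lake node (12 GiB/core, 32 cores)...
    try:
        cores_for_memory(500, 12, 32)
    except ValueError as err:
        print("Lake:", err)
    # ...but fits on Lake-bigmem (46 GiB/core, 32 cores)
    print("Lake-bigmem:", cores_for_memory(500, 46, 32))
```

Here ceil(500 / 46) = 11 cores on Lake-bigmem, whereas Lake would need 42 cores, more than a node has.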

Partition Lake-flix

This partition is open to everyone, but uses a preemption mode (see Preemption mode) in which high-priority jobs requeue low-priority jobs. It is ideal for burst campaigns (many short jobs) and workflows made of multiple jobs.

Hint

Best use case: sequential jobs, medium parallel jobs (<384c)

| login nodes | CPU Model | cores | RAM | ratio | InfiniBand | GPU | local scratch |
|---|---|---|---|---|---|---|---|
| cl6242comp[1-2] | Gold 6242 @ 2.8GHz | 32 | 384 GiB | 12 GiB/core | 56 Gb/s | | |

Lake scratches are available (see Partition Lake above).

Cluster/Partition Epyc

This partition is open to everyone.

Hint

Best use case: large parallel jobs (>256c)

| login nodes | CPU Model | cores | RAM | ratio | InfiniBand | GPU | local scratch |
|---|---|---|---|---|---|---|---|
| none | EPYC 7702 @ 2.0GHz | 128 | 512 GiB | 4 GiB/core | 100 Gb/s | N/A | None |

There is currently no login node in the Epyc partition. Use an Interactive session for builds and tests.

The Epyc partition has access to the Lake scratches (see Partition Lake above). There are no specific environment modules (yet).
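Since Epyc has no login node, builds and tests run inside an interactive allocation. The Python sketch below only assembles a typical `srun --pty` command line; the partition name Epyc, the core count and the time limit are assumptions, and the Interactive session page remains the reference for the site's recommended procedure. `interactive_cmd` is hypothetical.

```python
import shlex
from typing import List


def interactive_cmd(partition: str, cpus: int = 1, time: str = "01:00:00") -> List[str]:
    """Build an srun command line giving an interactive shell on `partition`."""
    return [
        "srun",
        f"--partition={partition}",
        "--ntasks=1",
        f"--cpus-per-task={cpus}",
        f"--time={time}",
        "--pty",      # attach a pseudo-terminal so the shell is interactive
        "bash", "-i",
    ]


if __name__ == "__main__":
    # Print the command; it can be pasted into a shell or passed to subprocess.run
    print(shlex.join(interactive_cmd("Epyc", cpus=8)))
```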

Cluster Cascade

Partition Cascade

This partition is open to everyone.

Hint

Best use case: sequential jobs, large parallel jobs (>256c)

| login nodes | CPU Model | cores | RAM | ratio | InfiniBand | GPU | local scratch |
|---|---|---|---|---|---|---|---|
| s92node01 | Platinum 9242 @ 2.3GHz | 96 | 384 GiB | 4 GiB/core | 100 Gb/s | N/A | None |

Cascade scratch (general-purpose scratch) is available from all nodes of the partition, at the following paths:

/scratch/
├── Cascade (general purpose scratch)
└── Cral

‘Cral’ scratch is meant for CRAL users and teams.

Choose ‘Cascade’ environment modules (See Modular Environment).
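Cascade nodes have 96 cores and Epyc nodes 128, so the node count of a large parallel job follows from a ceiling division. The Python sketch below also reports how many cores would stay idle on the last node; `layout` is a hypothetical helper.

```python
import math
from typing import Tuple

# Cores per node, from the Epyc and Cascade tables on this page
CORES_PER_NODE = {"Epyc": 128, "Cascade": 96}


def layout(total_cores: int, partition: str) -> Tuple[int, int]:
    """Return (nodes, idle_cores) for a job needing total_cores cores."""
    per_node = CORES_PER_NODE[partition]
    nodes = math.ceil(total_cores / per_node)   # whole nodes required
    idle = nodes * per_node - total_cores       # cores left unused
    return nodes, idle


if __name__ == "__main__":
    for part in CORES_PER_NODE:
        print(part, layout(512, part))
```

For 512 cores, Epyc needs 4 full nodes while Cascade needs 6 nodes with 64 cores idle; sizing jobs in multiples of 96 (Cascade) or 128 (Epyc) avoids that waste. The counts map onto sbatch's --nodes and --ntasks options.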

Partition Cascade-flix

Hint

Best use case: sequential jobs, large parallel jobs (>256c)

Same as partition Cascade above: this partition is open to everyone, but uses a preemption mode (see Preemption mode) in which high-priority jobs requeue low-priority jobs. It is ideal for burst campaigns (many short jobs) and workflows made of multiple jobs.
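The "Best use case" hints on this page can be read as a rough lookup by core count. The Python sketch below encodes them; the bounds come from the hints (<32c, <384c, >256c), while treating 1 core as "sequential" and 2 or more as "parallel", returning E5-GPU only for GPU jobs, and leaving out the restricted Lake-bigmem are choices of this sketch, not PSMN policy. Partition names are assumed to match the section titles, and `suggest` is hypothetical.

```python
from typing import List, Optional, Tuple

# (partition, suited to sequential jobs?, min cores, max cores or None)
# Bounds transcribed from the "Best use case" hints on this page.
HINTS: List[Tuple[str, bool, int, Optional[int]]] = [
    ("E5", True, 2, 31),               # small parallel jobs (<32c)
    ("Lake", False, 2, 383),           # medium parallel jobs (<384c)
    ("Lake-flix", True, 2, 383),       # sequential, medium parallel (<384c)
    ("Epyc", False, 257, None),        # large parallel jobs (>256c)
    ("Cascade", True, 257, None),      # sequential, large parallel (>256c)
    ("Cascade-flix", True, 257, None),
]


def suggest(cores: int, gpu: bool = False) -> List[str]:
    """Return candidate partitions for a job, following the page's hints."""
    if gpu:
        return ["E5-GPU"]  # the only GPU partition described here
    candidates = []
    for name, sequential, lo, hi in HINTS:
        if cores == 1:
            if sequential:
                candidates.append(name)
        elif cores >= lo and (hi is None or cores <= hi):
            candidates.append(name)
    return candidates


if __name__ == "__main__":
    for n in (1, 16, 300, 1000):
        print(n, suggest(n))
```

Between 257 and 383 cores the hints overlap, so both the Lake and the Epyc/Cascade families are returned.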